通用方法:贝叶斯优化LSTM超参数

优采云 发布时间: 2022-11-14 23:17通用方法:贝叶斯优化LSTM超参数

1. 摘要

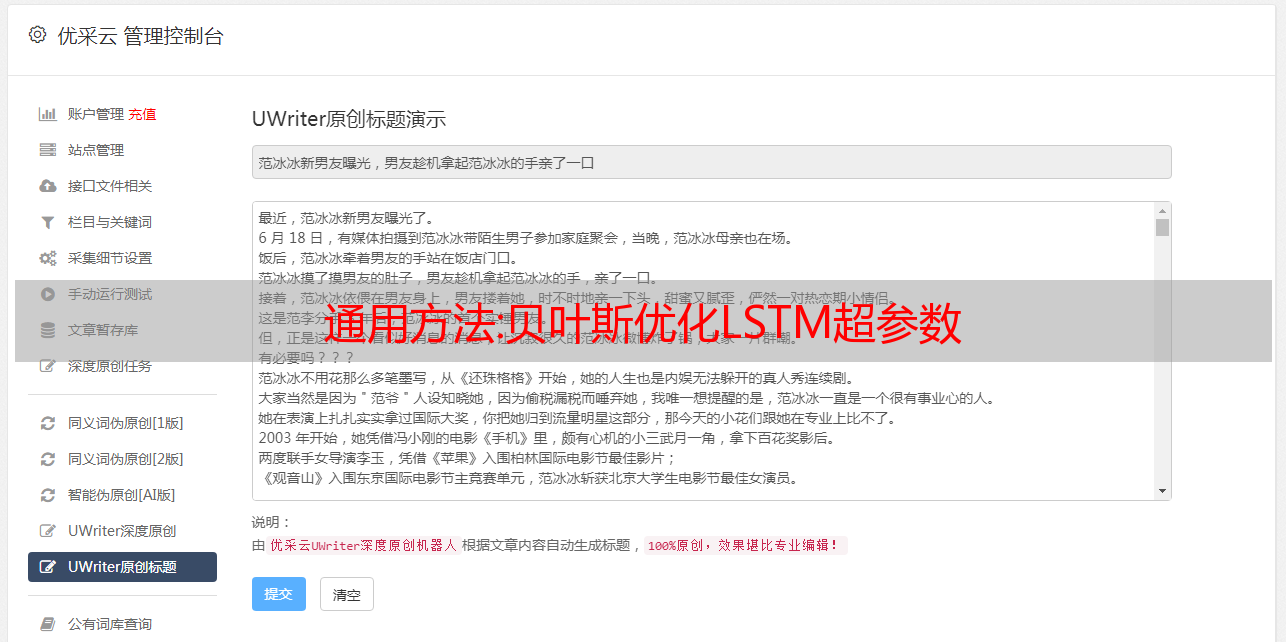

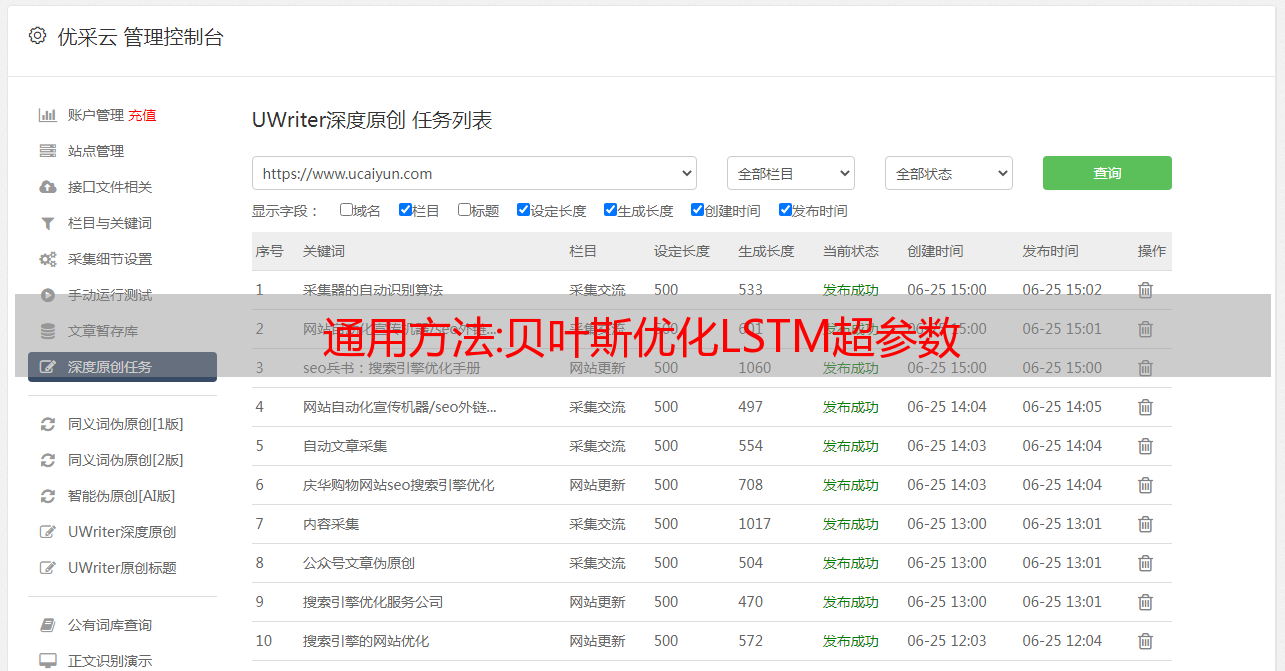

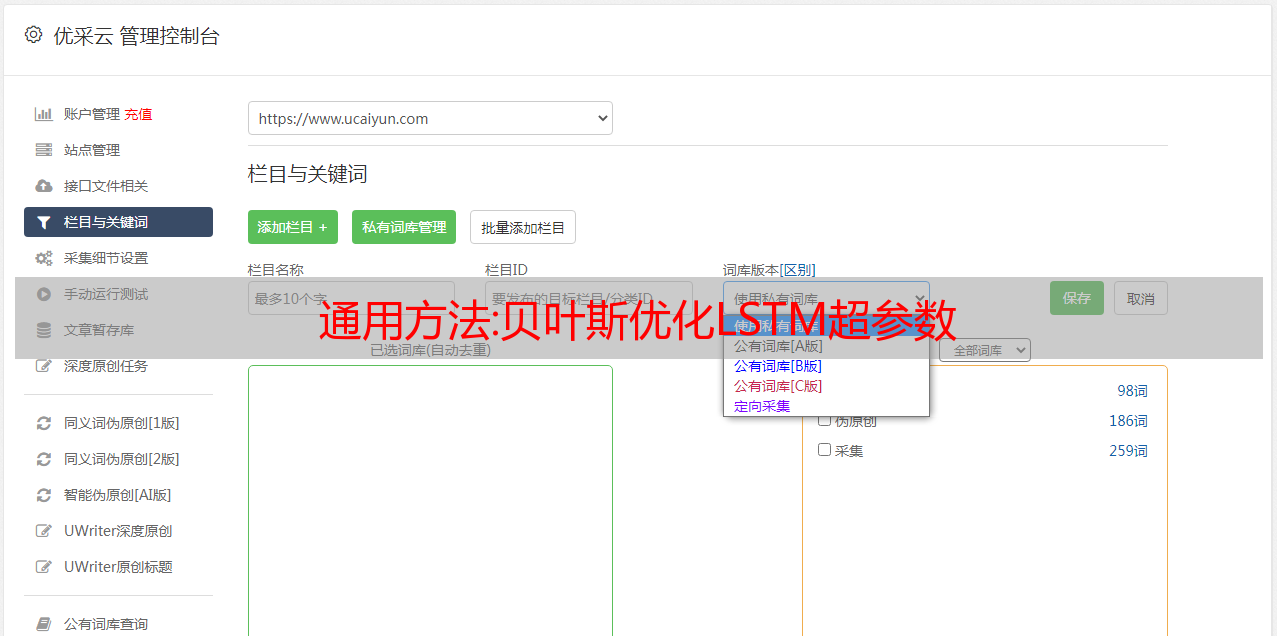

本文主要讲解:使用贝叶斯优化LSTM超参数

大意:

下载 IMDB 数据集并将其转换为序列数据以构建 LSTM 模型并训练以绘制准确度和损失曲线。图 2. 数据介绍

IMDB 数据集简介

IMDB 数据集收录来自 Internet 电影数据库 (IMDB) 的 50,000 条两极分化的评论。数据集分为 25,000 条训练评论和 25,000 条测试评论,训练集和测试集均收录 50% 的正面评论和 50% 的负面评论。

train_labels 和 test_labels 都是 0 和 1 的列表,其中 0 代表负数,1 代表正数

三、相关技术

下面是贝叶斯优化过程的图解:

为了得到这个函数的最小值,贝叶斯优化是这样的

基于构造的代理函数,我们可以在可能是最小值的点周围采集更多点,或者在未采样区域中采集更多点,有了更多点,代理函数可以进行更新,使其更接近真实目标函数的形状,从而更容易找到目标函数的最小值。这个采样过程也可以通过构造一个 采集 函数来表示。就是要知道当前代理函数的形状以及如何选择下一个x来最大化利润。

4.完整的代码和步骤

首先安装贝叶斯优化依赖

pip 安装贝叶斯优化

主要赛跑者进入

import matplotlib.pyplot as plt

import os

from bayes_opt import BayesianOptimization

from keras.callbacks import EarlyStopping, ModelCheckpoint

from keras.datasets import imdb

from keras.layers import Dense, Embedding, Dropout, LSTM

from keras.models import Sequential

from keras.preprocessing import sequence

def prepare_data(max_features, max_length):

(x_train, y_train), (x_val, y_val) = imdb.load_data(path="imdb.npz",

num_words=max_features,

skip_top=0,

maxlen=None,

seed=113,

start_char=1,

oov_char=2,

<p>

index_from=3)

x_train = sequence.pad_sequences(x_train, maxlen=max_length)

x_val = sequence.pad_sequences(x_val, maxlen=max_length)

return (x_train, y_train), (x_val, y_val)

def build_and_evaluate(data, max_features, dropout=0.2, lstm_units=32, fc_hidden=128, lr=3e-4, verbose=False):

(x_train, y_train), (x_val, y_val) = data

model = Sequential()

model.add(Embedding(input_dim=max_features, output_dim=lstm_units, input_length=x_train.shape[1]))

model.add(LSTM(lstm_units))

model.add(Dense(units=fc_hidden, activation='relu'))

model.add(Dropout(dropout))

model.add(Dense(units=1, activation='sigmoid'))

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

history = model.fit(x_train, y_train, validation_data=(x_val, y_val), epochs=100,

batch_size=512, verbose=verbose,

callbacks=[EarlyStopping(monitor='val_loss', patience=5, baseline=None),

ModelCheckpoint(model_dir + '/best_model.h5', monitor='val_loss',

save_best_only=True)])

plot_history(history)

return history.history['val_acc'][-1]

def plot_history(history):

# summarize history for accuracy

plt.plot(history.history['acc'])

plt.plot(history.history['val_acc'])

plt.title('model accuracy')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.show()

# summarize history for loss

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('model loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.show()

def overfit_batch(sample_size=2000):

max_features = 5000

(x_train, y_train), (x_val, y_val) = prepare_data(max_features=max_features, max_length=10)

data = (x_train[:sample_size], y_train[:sample_size]), (x_val[:sample_size], y_val[:sample_size])

history = build_and_evaluate(data, max_features, verbose=True)

plot_history(history)

return history.history['val_acc'][-1]

def bayesian_opt(data, max_features):

optimizer = BayesianOptimization(

f=build_and_evaluate(data, max_features),

pbounds={'dropout': (0.0, 0.5), 'lstm_units': (32, 500), 'fc_hidden': (32, 256)},

)

optimizer.maximize(

init_points=10,

n_iter=30,

)

model_dir = 'models'

if not os.path.exists(model_dir):

os.makedirs(model_dir)

# overfit_batch()

bayesian_opt(prepare_data(max_features=5000, max_length=500), 5000)

</p>

操作方法:SPA游标采集之去除重复

我们在做数据库升级项目时,通常会做性能回归测试。通俗的讲就是在11g生产环境上运行10g生产库的语句。导致执行计划发生变化并最终导致性能下降的语句需要单独分析和验证。去做这个

我们在做数据库升级项目时,通常会做性能回归测试。通俗的讲就是在11g生产环境上运行10g生产库的语句。导致执行计划发生变化并最终导致性能下降的语句需要单独分析和验证。为此,首先我们需要 采集 输出 10g 上的语句。采集 方法分为以下几种方式。

对于大型生产库,我们一般的 采集 方法是:来自 awr 快照的游标和数据。为了完美捕获所有 SQL 语句,我们经常需要每天多次对游标缓存执行 采集。大部分建议是放在高峰期采集,这主要是为了防止一些SQL在被sqlset捕获之前就被从共享池中清除了。在这个爬取的过程中,一个比较麻烦的问题是一些文字sql的语句。一个例子如下:

select * from emp where empno=1456;

select * from emp where empno=1457;

select * from emp where empno=1458;

这三个SQL语句会一个接一个的被采集输入,每天采集都会导致我们SQLSET的结果集越来越大。一般情况下,大型生产库的SQL语句只有几个w,但如果你的硬解析很多,你的采集语句可能在短短几天内就超过100w。以上。然后,SQLSET 转换为中转表的过程会执行很长时间。如果不好,会报ORA-01555,运行一段时间会导致转换失败。我被骗了几次。也许你会说,100w 数据,Oracle 应该尽快转换。我必须解释一下。我们的传输表实际上收录了几个 LOB 字段和特殊的 TYPE 类型。一旦数据量很大,可以说速度是完全无效的。正是出于这个原因,我们需要在采集的过程中考虑一种去重的方法。

我们举个例子来说明。

1.新的SQLSET

SQL> exec dbms_sqltune.CREATE_SQLSET('sqlset1');

PL/SQL procedure successfully completed.

SQL> select * from dba_sqlset;

ID NAME OWNER DESCRIPTION CREATED LAST_MODI STATEMENT_COUNT

---------- --------------- --------------- ------------------------------ --------- --------- ---------------

1 sqlset1 SYS 11-MAY-14 11-MAY-14 0

2.使用scott用户,执行几条语句,执行前flush共享池

SQL> alter system flush shared_pool;

System altered.

connect scott/tiger

select * from emp;

select * from emp where empno=1456;

select * from emp where empno=1457;

3. 以 sys 用户启动 采集 语句

DECLARE

mycur DBMS_SQLTUNE.SQLSET_CURSOR;

BEGIN

OPEN mycur FOR

SELECT value(P)

FROM TABLE(dbms_sqltune.select_cursor_cache('parsing_schema_name in (''SCOTT'')',

NULL,

NULL,

NULL,

NULL,

1,

NULL,

'ALL')) p;

dbms_sqltune.load_sqlset(sqlset_name => 'sqlset1',

populate_cursor => mycur,

load_option => 'MERGE');

CLOSE mycur;

END;

/

SQL> select * from dba_sqlset;

ID NAME OWNER DESCRIPTION CREATED LAST_MODI STATEMENT_COUNT

---------- --------------- --------------- ------------------------------ --------- --------- ---------------

1 sqlset1 SYS 11-MAY-14 11-MAY-14 9

4. 查看 采集 结果

SQL> select sql_id,sql_text from DBA_SQLSET_STATEMENTS ;

SQL_ID SQL_TEXT

------------- --------------------------------------------------------------------------------

1srhq04p4x0zz SELECT /* OPT_DYN_SAMP */ /*+ ALL_ROWS IGNORE_WHERE_CLAUSE NO_PARALLEL(SAMPLESUB

38mhtu5pc7d07 select * from emp where empno=1456

7hys3h7ysgf9m SELECT ATTRIBUTE,SCOPE,NUMERIC_VALUE,CHAR_VALUE,DATE_VALUE FROM SYSTEM.PRODUCT_P

a2dk8bdn0ujx7 select * from emp

bc26hcc8td76f select * from emp where empno=1457

<p>

cw6vxf0kbz3v1 SELECT CHAR_VALUE FROM SYSTEM.PRODUCT_PRIVS WHERE (UPPER('SQL*Plus') LIKE UPPE

d6vwqbw6r2ffk SELECT USER FROM DUAL

dyk4dprp70d74 SELECT DECODE('A','A','1','2') FROM DUAL

g4y6nw3tts7cc BEGIN DBMS_APPLICATION_INFO.SET_MODULE(:1,NULL); END;</p>

从这里我们可以观察到我们的三个语句都是采集进来的。这里我们可以看到我们的文字sql,如果每天游标多次采集,我们的文字sql会越来越多采集,导致SQLSET的结果集非常大。当 SQL 的数量达到数百万时,它使我们的转换变得非常缓慢。如何减肥?我们来看看这个 DBA_SQLSET_STATEMENTS 的结构。

SQL> desc DBA_SQLSET_STATEMENTS

Name Null? Type

------------------------------------------- -------- -----------------------------

SQLSET_NAME NOT NULL VARCHAR2(30)

SQLSET_OWNER VARCHAR2(30)

SQLSET_ID NOT NULL NUMBER

SQL_ID NOT NULL VARCHAR2(13)

FORCE_MATCHING_SIGNATURE NOT NULL NUMBER

SQL_TEXT CLOB

PARSING_SCHEMA_NAME VARCHAR2(30)

PARSING_SCHEMA_ID NUMBER

PLAN_HASH_VALUE NOT NULL NUMBER

BIND_DATA RAW(2000)

BINDS_CAPTURED CHAR(1)

MODULE VARCHAR2(64)

ACTION VARCHAR2(64)

ELAPSED_TIME NUMBER

CPU_TIME NUMBER

BUFFER_GETS NUMBER

DISK_READS NUMBER

DIRECT_WRITES NUMBER

ROWS_PROCESSED NUMBER

FETCHES NUMBER

EXECUTIONS NUMBER

END_OF_FETCH_COUNT NUMBER

OPTIMIZER_COST NUMBER

OPTIMIZER_ENV RAW(2000)

PRIORITY NUMBER

COMMAND_TYPE NUMBER

FIRST_LOAD_TIME VARCHAR2(19)

STAT_PERIOD NUMBER

ACTIVE_STAT_PERIOD NUMBER

OTHER CLOB

PLAN_TIMESTAMP DATE

SQL_SEQ NOT NULL NUMBER

SQL> select sql_id,sql_text,FORCE_MATCHING_SIGNATURE from DBA_SQLSET_STATEMENTS;

SQL_ID SQL_TEXT FORCE_MATCHING_SIGNATURE

------------- -------------------------------------------------------------------------------- ---------------------------

1srhq04p4x0zz SELECT /* OPT_DYN_SAMP */ /*+ ALL_ROWS IGNORE_WHERE_CLAUSE NO_PARALLEL(SAMPLESUB 4094562552765466770

38mhtu5pc7d07 select * from emp where empno=1456 16946033956547040230

7hys3h7ysgf9m SELECT ATTRIBUTE,SCOPE,NUMERIC_VALUE,CHAR_VALUE,DATE_VALUE FROM SYSTEM.PRODUCT_P 10967007256268736959

a2dk8bdn0ujx7 select * from emp 7001777653489406494

bc26hcc8td76f select * from emp where empno=1457 16946033956547040230

cw6vxf0kbz3v1 SELECT CHAR_VALUE FROM SYSTEM.PRODUCT_PRIVS WHERE (UPPER('SQL*Plus') LIKE UPPE 18201431879876406267

d6vwqbw6r2ffk SELECT USER FROM DUAL 17376422952071979402

dyk4dprp70d74 SELECT DECODE('A','A','1','2') FROM DUAL 1846728577492307645

g4y6nw3tts7cc BEGIN DBMS_APPLICATION_INFO.SET_MODULE(:1,NULL); END; 0

这里我们主要使用 FORCE_MATCHING_SIGNATURE 字段。可以看到我们文字sql的FORCE_MATCHING_SIGNATURE的值是一样的。这里是 547040230。所以我们需要区分这一列,并将不同的值放在自定义表中。

5. 去重采集

SQL> create table spaqc as select distinct FORCE_MATCHING_SIGNATURE from DBA_SQLSET_STATEMENTS;

Table created.

<p>

SQL> select * from spaqc;

FORCE_MATCHING_SIGNATURE

---------------------------

18201431879876406267

1846728577492307645

4094562552765466770

17376422952071979402

10967007256268736959

7001777653489406494

16946033956547040230

8 rows selected.</p>

这里需要注意的是,当FORCE_MATCHING_SIGNATURE为0时,一般会运行PL/SQL、JOB等操作,我们无法过滤掉。所以我们必须删除第 0 行。

SQL> delete from spaqc where FORCE_MATCHING_SIGNATURE=0;

1 row deleted.

SQL> commit;

Commit complete.

6. 再次测试看看文字sql是否为采集。

select * from emp where empno=1458;

select * from emp where empno=1459;

select * from emp where empno=1460;

select * from emp where empno=1460 and ENAME='scott';

DECLARE

mycur DBMS_SQLTUNE.SQLSET_CURSOR;

BEGIN

OPEN mycur FOR

SELECT value(P)

FROM TABLE(dbms_sqltune.select_cursor_cache('parsing_schema_name in (''SCOTT'') and FORCE_MATCHING_SIGNATURE not in (select FORCE_MATCHING_SIGNATURE from spaqc)',

NULL,

NULL,

NULL,

NULL,

1,

NULL,

'ALL')) p;

dbms_sqltune.load_sqlset(sqlset_name => 'sqlset1',

populate_cursor => mycur,

load_option => 'MERGE');

CLOSE mycur;

END;

/

SQL> select sql_id,sql_text,FORCE_MATCHING_SIGNATURE from DBA_SQLSET_STATEMENTS ;

SQL_ID SQL_TEXT FORCE_MATCHING_SIGNATURE

------------- -------------------------------------------------------------------------------- ---------------------------

1srhq04p4x0zz SELECT /* OPT_DYN_SAMP */ /*+ ALL_ROWS IGNORE_WHERE_CLAUSE NO_PARALLEL(SAMPLESUB 4094562552765466770

38mhtu5pc7d07 select * from emp where empno=1456 16946033956547040230

7hys3h7ysgf9m SELECT ATTRIBUTE,SCOPE,NUMERIC_VALUE,CHAR_VALUE,DATE_VALUE FROM SYSTEM.PRODUCT_P 10967007256268736959

a2dk8bdn0ujx7 select * from emp 7001777653489406494

bc26hcc8td76f select * from emp where empno=1457 16946033956547040230

cw6vxf0kbz3v1 SELECT CHAR_VALUE FROM SYSTEM.PRODUCT_PRIVS WHERE (UPPER('SQL*Plus') LIKE UPPE 18201431879876406267

d6vwqbw6r2ffk SELECT USER FROM DUAL 17376422952071979402

d8fw5smyjva0b select * from emp where empno=1460 and ENAME='scott' 17445701640293030006

dyk4dprp70d74 SELECT DECODE('A','A','1','2') FROM DUAL 1846728577492307645

g4y6nw3tts7cc BEGIN DBMS_APPLICATION_INFO.SET_MODULE(:1,NULL); END; 0

10 rows selected.

这里我们看到采集没有输入文字sql,我们实现了游标采集的过滤。