How to scrape a web database with PHP (using Scrapy to fetch data: create the project, configure the items, build the spider)

优采云, published 2022-03-14 06:08

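Introduction

OMIM stands for Online Mendelian Inheritance in Man. It is a continuously updated database of human Mendelian disorders that focuses on the relationship between human genetic variation and phenotypic traits.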

The official OMIM website is at omim.org.

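Registered OMIM users can download the data or retrieve it through the API. Here we instead try a crawler to collect the Phenotype-Gene Relationships data.

Create a Scrapy project to fetch the data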

scrapy startproject omimScrapy

cd omimScrapy

scrapy genspider omim omim.org

Define the item fields

import scrapy


class OmimscrapyItem(scrapy.Item):
    # define the fields for your item here like:
    geneSymbol = scrapy.Field()
    mimNumber = scrapy.Field()
    location = scrapy.Field()
    phenotype = scrapy.Field()
    phenotypeMimNumber = scrapy.Field()
    inheritance = scrapy.Field()
    mappingKey = scrapy.Field()
    descriptionFold = scrapy.Field()
    diagnosisFold = scrapy.Field()
    inheritanceFold = scrapy.Field()
    populationGeneticsFold = scrapy.Field()

Write the spider

We crawl the entries listed in mim2gene.txt one by one, so the file has to be parsed first.

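def readMim2Gene(self, filename):
    '''
    Parse OMIM's mim2gene.txt and return a list of
    [mimNumber, mimEntryType, geneSymbol] rows.
    '''
    filelist = []
    with open(filename, "r") as f:
        for line in f:
            # Skip blank lines and the "#" header comments.
            if not line.strip() or line.startswith("#"):
                continue
            # The columns are tab-separated; splitting on tabs keeps empty columns in place.
            strs = line.rstrip("\n").split("\t")
            if len(strs) < 2:
                continue
            mimNumber = strs[0]
            mimEntryType = strs[1]
            # "." marks a missing gene symbol.
            geneSymbol = "."
            if len(strs) >= 4 and strs[3].strip():
                geneSymbol = strs[3].strip()
            # Only gene and gene/phenotype entries are crawled.
            if mimEntryType in ["gene", "gene/phenotype"]:
                filelist.append([mimNumber, mimEntryType, geneSymbol])
    return filelist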
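The filtering rule can be checked without the real download. The sketch below applies the same kind of filter to a few in-memory lines. The rows are made-up placeholders in the tab-separated layout assumed for mim2gene.txt, and `filter_gene_rows` is a hypothetical helper name, not part of the project.

```python
def filter_gene_rows(lines):
    """Keep [mimNumber, entryType, geneSymbol] for gene and gene/phenotype rows."""
    rows = []
    for line in lines:
        # Skip blank lines and "#" header comments.
        if not line.strip() or line.startswith("#"):
            continue
        fields = line.rstrip("\n").split("\t")
        if len(fields) < 2:
            continue
        # "." stands in for a missing gene symbol.
        symbol = "."
        if len(fields) >= 4 and fields[3].strip():
            symbol = fields[3].strip()
        if fields[1] in ("gene", "gene/phenotype"):
            rows.append([fields[0], fields[1], symbol])
    return rows


# Placeholder rows for illustration; these are NOT real OMIM records.
sample = [
    "# MIM Number\tMIM Entry Type\tEntrez Gene ID\tApproved Gene Symbol\tEnsembl Gene ID\n",
    "900001\tgene\t1\tGENEA\tENSG_A\n",
    "900002\tphenotype\t\t\t\n",
    "900003\tgene/phenotype\t\t\t\n",
]

rows = filter_gene_rows(sample)
print(rows)
```

The phenotype-only row is dropped, and the row without a gene symbol keeps "." as its placeholder.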

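Once the file is parsed, the crawl entry points have to be generated dynamically: the start_requests method builds the URLs to fetch, and each URL is then crawled for its content.

Note: at this stage you can either parse the HTML as it is downloaded and extract what you need, or save the HTML first and process everything later. Here the downloaded HTML is not parsed. It is saved directly to a file named after the mimNumber, with the .html extension.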

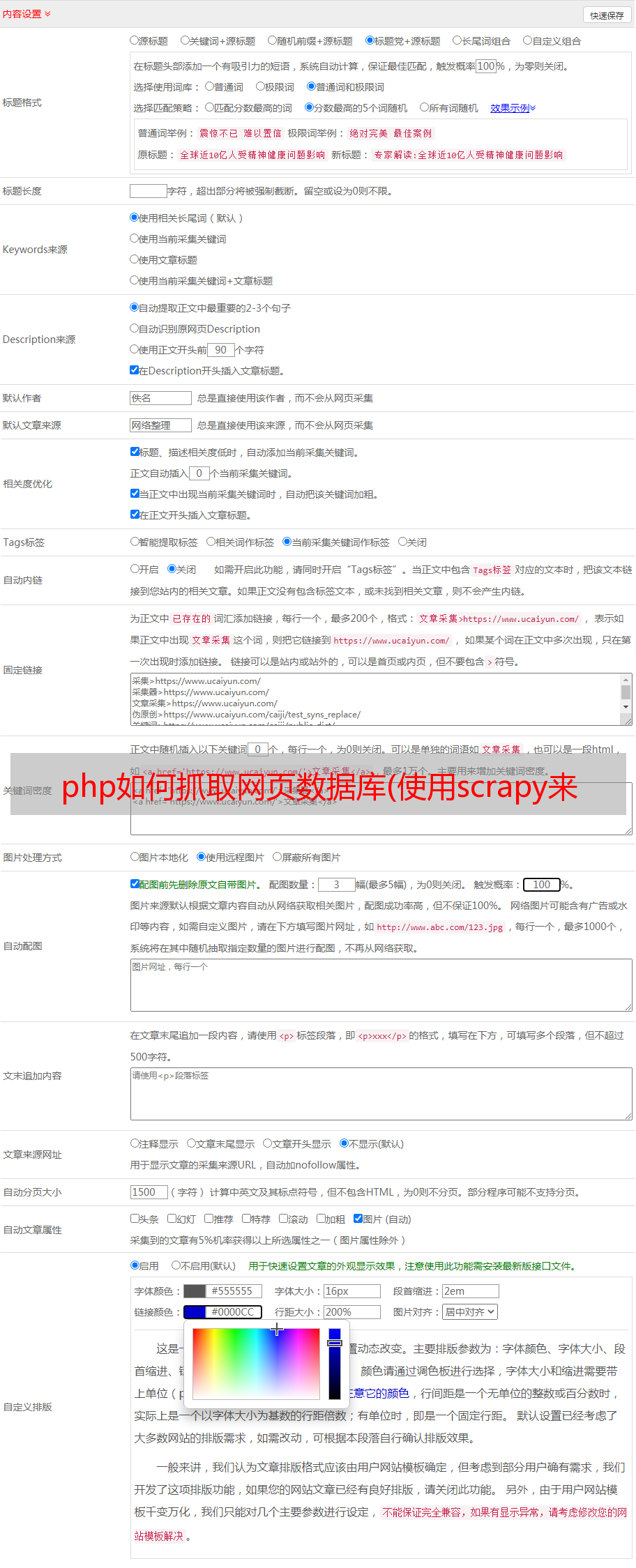

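Spider settings

OMIM's robots.txt defines a crawler policy that only allows Microsoft's Bingbot and Google's Googlebot to fetch specified paths. The following settings are the ones to pay attention to.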

BOT_NAME = 'bingbot'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

USER_AGENT = 'bingbot (+https://www.bing.com/bingbot.htm)'

# Configure a delay for requests for the same website (default: 0)

DOWNLOAD_DELAY = 4

# Disable cookies (enabled by default)

COOKIES_ENABLED = False
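To see how a per-crawler robots.txt policy of this kind is evaluated, Python's standard `urllib.robotparser` can be run against a small rule set. The rules below are a made-up stand-in written for illustration, not OMIM's actual robots.txt, and `example.org` is a placeholder host.

```python
from urllib.robotparser import RobotFileParser

# Made-up rules in the spirit of the policy described above; NOT the real file.
ROBOTS_TXT = """\
User-agent: bingbot
Allow: /entry/
Disallow: /

User-agent: *
Disallow: /
"""


def allowed(user_agent, url):
    """Return True if the sample rules let `user_agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


print(allowed("bingbot", "https://example.org/entry/900001"))  # True
print(allowed("bingbot", "https://example.org/search"))        # False
print(allowed("mybot", "https://example.org/entry/900001"))    # False
```

Under such rules the decision depends only on the user-agent string and the requested path, which is why the settings above focus on USER_AGENT.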

Run

Now the crawl can be started. It is slow and takes roughly a day. When it finishes, every page has been saved as a local HTML file.

scrapy crawl omim
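The running time is dominated by DOWNLOAD_DELAY, so it can be estimated from the number of entries to fetch. The sketch below assumes pages are fetched one after another with an average gap equal to the configured delay. The page count is a placeholder, not a figure from OMIM, and `estimate_crawl_hours` is a hypothetical helper.

```python
def estimate_crawl_hours(n_pages, download_delay=4.0, seconds_per_fetch=0.0):
    """Rough estimate: sequential fetches separated by a fixed average delay."""
    return n_pages * (download_delay + seconds_per_fetch) / 3600.0


# 16000 is a placeholder page count used only to show the arithmetic.
print(round(estimate_crawl_hours(16000), 1))  # 17.8
```

In practice the page count is the length of the list returned by the mim2gene.txt parser, and network latency adds to the per-page time.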

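Post-crawl extraction

Extracting from the local HTML files is straightforward and can be done with BeautifulSoup. The core of the extraction looks like this: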

from bs4 import BeautifulSoup


def cellText(td):
    # Return the stripped text of a cell, or "." when the cell is empty.
    text = td.get_text().strip()
    return text if text != '' else '.'


def parseHtmlTable(html):
    '''
    Parse the Phenotype-Gene Relationships table
    '''
    soup = BeautifulSoup(html, "html.parser")
    table = soup.table
    location, phenotype, mimNumber, inheritance, mappingKey = "", "", "", "", ""
    if not table:
        result = "ERROR"
    else:
        result = "SUCCESS"
        trs = table.find_all('tr')
        for tr in trs:
            tds = tr.find_all('td')
            if len(tds) == 0:
                # Rows without <td> cells (such as the header row) are skipped.
                continue
            elif len(tds) == 4:
                # Rows with four cells carry no location cell; append to the previous values.
                phenotype = phenotype + "|" + cellText(tds[0])
                mimNumber = mimNumber + "|" + cellText(tds[1])
                inheritance = inheritance + "|" + cellText(tds[2])
                mappingKey = mappingKey + "|" + cellText(tds[3])
            elif len(tds) == 5:
                # Rows with five cells start with the location.
                location = cellText(tds[0])
                phenotype = cellText(tds[1])
                mimNumber = cellText(tds[2])
                inheritance = cellText(tds[3])
                mappingKey = cellText(tds[4])
            else:
                result = "ERROR"
    # Text sections are looked up by element id; "." marks a missing section.
    descriptionFoldList = soup.select("#descriptionFold")
    descriptionFold = "." if len(descriptionFoldList) == 0 else descriptionFoldList[0].get_text().strip()
    diagnosisFoldList = soup.select("#diagnosisFold")
    diagnosisFold = "." if len(diagnosisFoldList) == 0 else diagnosisFoldList[0].get_text().strip()
    inheritanceFoldList = soup.select("#inheritanceFold")
    inheritanceFold = "." if len(inheritanceFoldList) == 0 else inheritanceFoldList[0].get_text().strip()
    populationGeneticsFoldList = soup.select("#populationGeneticsFold")
    populationGeneticsFold = "." if len(populationGeneticsFoldList) == 0 else populationGeneticsFoldList[0].get_text().strip()
    # Collect the extracted values; adapt the shape to your needs.
    return {
        "result": result,
        "location": location,
        "phenotype": phenotype,
        "phenotypeMimNumber": mimNumber,
        "inheritance": inheritance,
        "mappingKey": mappingKey,
        "descriptionFold": descriptionFold,
        "diagnosisFold": diagnosisFold,
        "inheritanceFold": inheritanceFold,
        "populationGeneticsFold": populationGeneticsFold,
    }
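The function above treats rows with five cells and rows with four cells differently. The toy table below shows one way such cell counts can arise: the first data row carries a location cell that spans several rows, so later rows omit it. The markup is an assumption written for illustration and is not copied from an OMIM page. `cells_per_row` is a hypothetical helper.

```python
from bs4 import BeautifulSoup

# Toy markup assumed for illustration; NOT taken from a real OMIM page.
HTML = """
<table>
  <tr><th>Location</th><th>Phenotype</th><th>Phenotype MIM number</th><th>Inheritance</th><th>Phenotype mapping key</th></tr>
  <tr><td rowspan="2">1p1</td><td>Phenotype A</td><td>900101</td><td>AD</td><td>3</td></tr>
  <tr><td>Phenotype B</td><td>900102</td><td></td><td>3</td></tr>
</table>
"""


def cells_per_row(html):
    """Return the <td> texts of each row, with "." for empty cells."""
    soup = BeautifulSoup(html, "html.parser")
    return [
        [td.get_text().strip() or "." for td in tr.find_all("td")]
        for tr in soup.table.find_all("tr")
    ]


rows = cells_per_row(HTML)
for row in rows:
    print(len(row), row)
```

The header row yields no `<td>` cells, the first data row yields five, and the second yields four, which matches the three branches in the extraction loop.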

The final output format depends on your own needs.
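As one possible output format, the saved pages can be turned into a tab-separated file with one row per entry. This is a minimal sketch using only the standard library. `write_tsv` and its parameters are hypothetical names, and `extract` stands for any function that maps an HTML string to a dict of fields.

```python
import csv
from pathlib import Path


def write_tsv(html_dir, out_path, extract, columns):
    """Run `extract` on every saved <mimNumber>.html and write one TSV row per file."""
    with open(out_path, "w", newline="", encoding="utf-8") as out:
        writer = csv.writer(out, delimiter="\t")
        writer.writerow(["mimNumber"] + list(columns))
        for path in sorted(Path(html_dir).glob("*.html")):
            record = extract(path.read_text(encoding="utf-8"))
            # Missing keys fall back to ".", the placeholder used for empty values.
            writer.writerow([path.stem] + [record.get(c, ".") for c in columns])
```

A call might look like `write_tsv("html", "omim.tsv", my_extract, ["location", "phenotype"])`, where `my_extract` is your own extraction function returning a dict. The MIM number is taken from the file name, so it does not need to be extracted from the page.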