Scraping web page data (question: when locating tags with regular expressions, can you combine multiple conditions?)

优采云 · Published: 2022-01-22 23:01

Question: when locating tags with regular expressions, can you use multiple conditions at once?

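Yes, and it is very convenient. find_all() accepts several filters in a single call and returns only the tags that satisfy all of them. This saves a lot of manual filtering afterwards.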

Example: suppose I want to scrape the product links from the bags listing on Secoo (寺库). URL: http://list.secoo.com/bags/30-0-0-0-0-1-0-0-1-10-0-0.shtml#pageTitle

The code is as follows:

import requests

from bs4 import BeautifulSoup

import chardet

import re

import random

USER_AGENTS = [

'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36 OPR/26.0.1656.60',

'Opera/8.0 (Windows NT 5.1; U; en)',

'Mozilla/5.0 (Windows NT 5.1; U; en; rv:1.8.1) Gecko/20061208 Firefox/2.0.0 Opera 9.50',

'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; en) Opera 9.50',

'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:34.0) Gecko/20100101 Firefox/34.0',

'Mozilla/5.0 (X11; U; Linux x86_64; zh-CN; rv:1.9.2.10) Gecko/20100922 Ubuntu/10.10 (maverick) Firefox/3.6.10',

'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.57.2 (KHTML, like Gecko) Version/5.1.7 Safari/534.57.2',

'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.71 Safari/537.36',

'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.11 (KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11',

'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.16 (KHTML, like Gecko) Chrome/10.0.648.133 Safari/534.16',

'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.11 TaoBrowser/2.0 Safari/536.11',

'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.71 Safari/537.1 LBBROWSER',

'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E; LBBROWSER)',

'Mozilla/5.0 (Windows NT 5.1) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.84 Safari/535.11 SE 2.X MetaSr 1.0',

'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; SV1; QQDownload 732; .NET4.0C; .NET4.0E; SE 2.X MetaSr 1.0)',

'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/38.0.2125.122 UBrowser/4.0.3214.0 Safari/537.36'

]

url = 'http://list.secoo.com/bags/30-0-0-0-0-1-0-0-1-10-0-0.shtml#pageTitle'

random_user_agent = random.choice(USER_AGENTS)

headers = {

'user-agent': random_user_agent}

response = requests.get(url=url, headers=headers)

response.encoding = chardet.detect(response.content)['encoding']

text = response.text

soup = BeautifulSoup(text, 'lxml')

#print(soup)

new_url_list = soup.find_all('a',href=re.compile('source=list'))

for i in new_url_list:

    print(i)

print(len(new_url_list))

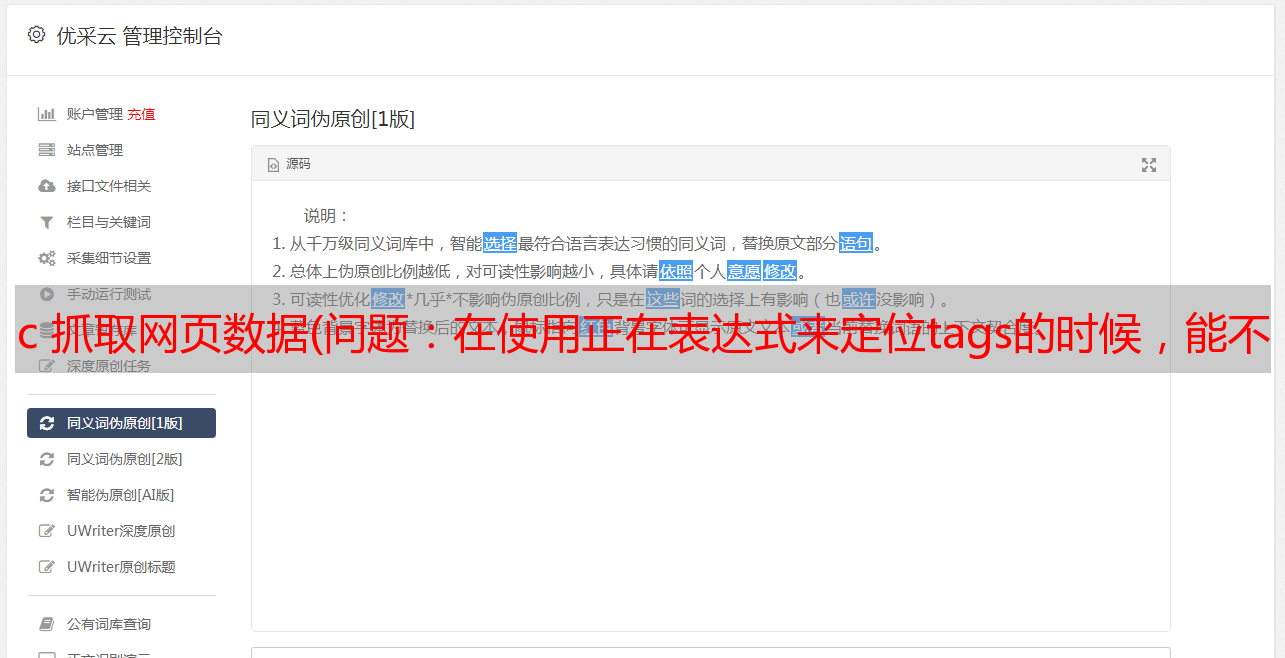

Here we use

new_url_list = soup.find_all('a', href=re.compile('source=list'))

This uses a regular expression to select every a tag whose href attribute contains 'source=list'. The results:

In total, 108 tags were matched, but only 40 of them are the links we actually want.

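Inspecting the output shows that the tags we want are exactly those whose id contains 'name'. We can therefore add a second, simple regular expression to pinpoint them: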

new_url_list = soup.find_all('a',href=re.compile('source=list'), id=re.compile('name'))

The complete code:

import requests

from bs4 import BeautifulSoup

import chardet

import re

import random

USER_AGENTS = [

'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36 OPR/26.0.1656.60',

'Opera/8.0 (Windows NT 5.1; U; en)',

'Mozilla/5.0 (Windows NT 5.1; U; en; rv:1.8.1) Gecko/20061208 Firefox/2.0.0 Opera 9.50',

'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; en) Opera 9.50',

'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:34.0) Gecko/20100101 Firefox/34.0',

'Mozilla/5.0 (X11; U; Linux x86_64; zh-CN; rv:1.9.2.10) Gecko/20100922 Ubuntu/10.10 (maverick) Firefox/3.6.10',

'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.57.2 (KHTML, like Gecko) Version/5.1.7 Safari/534.57.2',

'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.71 Safari/537.36',

'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.11 (KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11',

'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.16 (KHTML, like Gecko) Chrome/10.0.648.133 Safari/534.16',

'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.11 TaoBrowser/2.0 Safari/536.11',

'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.71 Safari/537.1 LBBROWSER',

'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E; LBBROWSER)',

'Mozilla/5.0 (Windows NT 5.1) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.84 Safari/535.11 SE 2.X MetaSr 1.0',

'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; SV1; QQDownload 732; .NET4.0C; .NET4.0E; SE 2.X MetaSr 1.0)',

'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/38.0.2125.122 UBrowser/4.0.3214.0 Safari/537.36'

]

url = 'http://list.secoo.com/bags/30-0-0-0-0-1-0-0-1-10-0-0.shtml#pageTitle'

random_user_agent = random.choice(USER_AGENTS)

headers = {

'user-agent': random_user_agent}

response = requests.get(url=url, headers=headers)

response.encoding = chardet.detect(response.content)['encoding']

text = response.text

soup = BeautifulSoup(text, 'lxml')

#print(soup)

new_url_list = soup.find_all('a',href=re.compile('source=list'), id=re.compile('name'))

for i in new_url_list:

    print(i)

print(len(new_url_list))

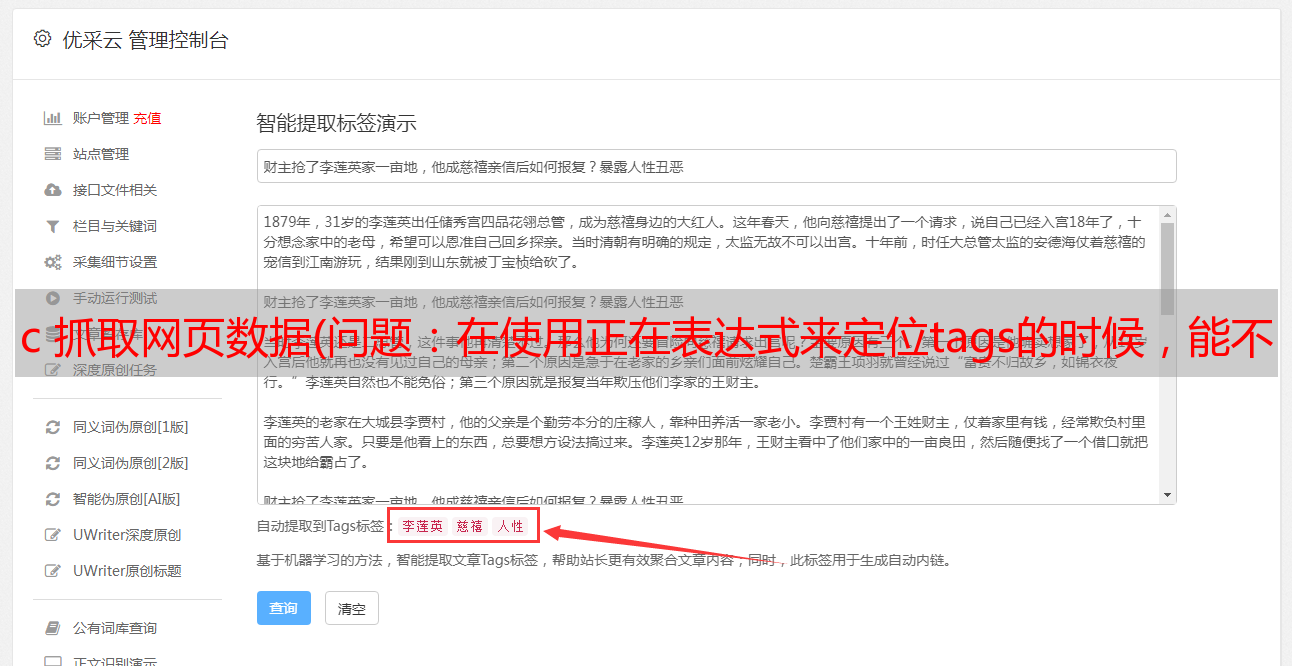

Result:

40 links, which are exactly the ones we need.
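The Secoo page may have changed since this was written. Below is a self-contained sketch of the same two-condition filter that runs against a small made-up HTML fragment. The markup, hrefs and ids are hypothetical and are not copied from Secoo. The sketch also shows an equivalent CSS selector written with select(). That method relies on the soupsieve package, which recent Beautiful Soup releases install as a dependency.

```python
import re

from bs4 import BeautifulSoup

# Hypothetical listing markup: only the product-name links have both
# "source=list" in href and "name" in id.
html = """
<div class="list">
  <a href="/item/1.shtml?source=list" id="name_1">Bag 1</a>
  <a href="/item/1.shtml?source=list" id="img_1"><img src="1.jpg"></a>
  <a href="/item/2.shtml?source=list" id="name_2">Bag 2</a>
  <a href="/help.shtml" id="help">Help</a>
</div>
"""

soup = BeautifulSoup(html, "html.parser")

# One condition: every <a> whose href contains "source=list" (3 tags here).
one_condition = soup.find_all("a", href=re.compile("source=list"))

# Two conditions: keyword filters are combined with AND (2 tags here).
two_conditions = soup.find_all(
    "a", href=re.compile("source=list"), id=re.compile("name")
)

# The same filter as a CSS selector: *= means "attribute value contains".
via_css = soup.select('a[href*="source=list"][id*="name"]')

for tag in two_conditions:
    print(tag["id"], tag["href"])
```

With this markup, the loop prints name_1 and name_2 together with their hrefs. The image link is dropped because its id does not contain "name".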

Key concepts:

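find_all() searches all descendant tags of the current tag and returns those that match the filter conditions. The examples below use this sample document: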

html = """

The Dormouse's story

<p class="title">The Dormouse's story

Once upon a time there were three little sisters; and their names were

Lacie and

and they lived at the bottom of a well.

...

"""</p>

① The name parameter

The name parameter finds all tags with the given tag name. Text nodes are ignored automatically.

A. Passing a string

# Pass a string to find all b tags

print(soup.find_all('b'))

# Output: [<b>The Dormouse's story</b>]

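B. Passing a regular expression

If you pass a regular expression, Beautiful Soup filters tag names using the expression's search() method. The example below finds all tags whose names start with b, so both the body tag and the b tag are found: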

import re

for tag in soup.find_all(re.compile('^b')):

    print(tag.name)

# Output:

# body

# b

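C. Passing a list

If you pass a list, Beautiful Soup returns the tags that match any item in it. This finds all a tags and all b tags:

content = soup.find_all(["a","b"])

print(content)

"""

Output:

[<b>The Dormouse's story</b>, <a class="sister" href="http://example.com/elsie" id="link1"><!-- Elsie --></a>, <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>, <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>]

"""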

D. Passing True

True matches any value. find_all(True) returns every tag in the document but none of the text nodes.
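The code for this case is missing from the text. Here is a minimal reconstruction, assuming the same three-sisters sample document. The document is re-declared so the block runs on its own, and it is parsed with the built-in html.parser instead of lxml so that no extra package is needed.

```python
from bs4 import BeautifulSoup

html = """
<html><head><title>The Dormouse's story</title></head>
<body>
<p class="title"><b>The Dormouse's story</b></p>
<p class="story">Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1"><!-- Elsie --></a>,
<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and
<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>;
and they lived at the bottom of a well.</p>
<p class="story">...</p>
"""

soup = BeautifulSoup(html, "html.parser")

# True matches every tag; text nodes and comments are never returned.
tag_names = [tag.name for tag in soup.find_all(True)]
print(tag_names)
# ['html', 'head', 'title', 'body', 'p', 'b', 'p', 'a', 'a', 'a', 'p']
```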

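E. Passing a function

If none of the other filters fits, you can define a function that takes a single element as its only argument. The function should return True if the element matches, in which case it is included in the results, and False otherwise.

The following function returns True if the element has an id attribute:

# Define a filter function: match any tag that has an id attribute

def has_id(tag):

    return tag.has_attr('id')

content = soup.find_all(has_id)

print(content)

"""

Output:

[<a class="sister" href="http://example.com/elsie" id="link1"><!-- Elsie --></a>, <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>, <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>]

"""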

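② Keyword arguments

Note: any keyword argument whose name is not one of find_all()'s built-in parameters is treated as a filter on the tag attribute of that name. For example, if you pass id, Beautiful Soup filters on each tag's id attribute:

print(soup.find_all(id="link3"))

# Output: [<a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>]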

If you pass href, Beautiful Soup filters on each tag's href attribute:

import re

print(soup.find_all(href=re.compile("lacie")))

# Output: [<a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>]

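Pass several keyword arguments to filter on multiple attributes of a tag at the same time. A tag is returned only if it matches all of them:

import re

print(soup.find_all(href=re.compile("lacie"), id="link2"))

# Output: [<a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>]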

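To filter by CSS class, use class_. Because class is a reserved keyword in Python, Beautiful Soup uses the name with a trailing underscore:

print(soup.find_all("a",class_="sister"))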

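③ The text parameter

The text parameter searches the string content of the document instead of tags. Like the name parameter, it accepts a string, a regular expression, a list, or True. In Beautiful Soup 4.4.0 and later, this parameter is named string; text is still accepted as the older name.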

# Pass a string

print(soup.find_all(text="Lacie"))

# Output: ['Lacie']

# Pass a list

print(soup.find_all(text=["Lacie","Tillie"]))

# Output: ['Lacie', 'Tillie']

# Pass a regular expression

import re

print(soup.find_all(text=re.compile("Dormouse")))

# Output: ["The Dormouse's story", "The Dormouse's story"]

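④ The limit parameter

find_all() returns every match, which can be slow on a large document. If you don't need all the results, pass a number as limit. The search stops once that many matches have been found, much like LIMIT in SQL.

print(soup.find_all("a"))

"""

Output:

[<a class="sister" href="http://example.com/elsie" id="link1"><!-- Elsie --></a>, <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>, <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>]

"""

print(soup.find_all("a",limit=2))

"""

Output:

[<a class="sister" href="http://example.com/elsie" id="link1"><!-- Elsie --></a>, <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>]

"""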

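⑤ The recursive parameter

recursive controls whether the search descends recursively through the tree.

When you call a tag's find_all() method, Beautiful Soup searches all of that tag's descendants. To search only the tag's direct children, pass recursive=False.

print(soup.html.find_all("title"))

# Output: [<title>The Dormouse's story</title>]

print(soup.html.find_all("title",recursive=False))

# Output: [] (title is a child of head, not a direct child of html)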
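The same lookup succeeds with recursive=False if it starts from the title's actual parent. This minimal sketch compares the three cases on a one-line copy of the sample document's head and body:

```python
from bs4 import BeautifulSoup

html = "<html><head><title>The Dormouse's story</title></head><body><p><b>The Dormouse's story</b></p></body></html>"

soup = BeautifulSoup(html, "html.parser")

# Default (recursive=True): all descendants of html are searched.
deep = soup.html.find_all("title")

# recursive=False: only direct children of html (head and body) are checked.
shallow = soup.html.find_all("title", recursive=False)

# title is a direct child of head, so this search finds it.
from_head = soup.head.find_all("title", recursive=False)

print(len(deep), len(shallow), len(from_head))  # 1 0 1
```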