Scraping 51job (前程无忧): every job listing in one function!!!!!

优采云 · Published: 2021-12-09 16:19

A whole site's job postings, all in one go!!! Really, all in one go.

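Graduation season is here again, blah blah blah. So, do you have a good plan for where you want to go next? Your best bet is to scrape some job-posting data and analyze it. The big sites either have little data or make it hard to get what you want. 51job (前程无忧), however, caught my eye right away: it has a large volume of data with fairly complete information. Not bad at all.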

1. Analyzing the page

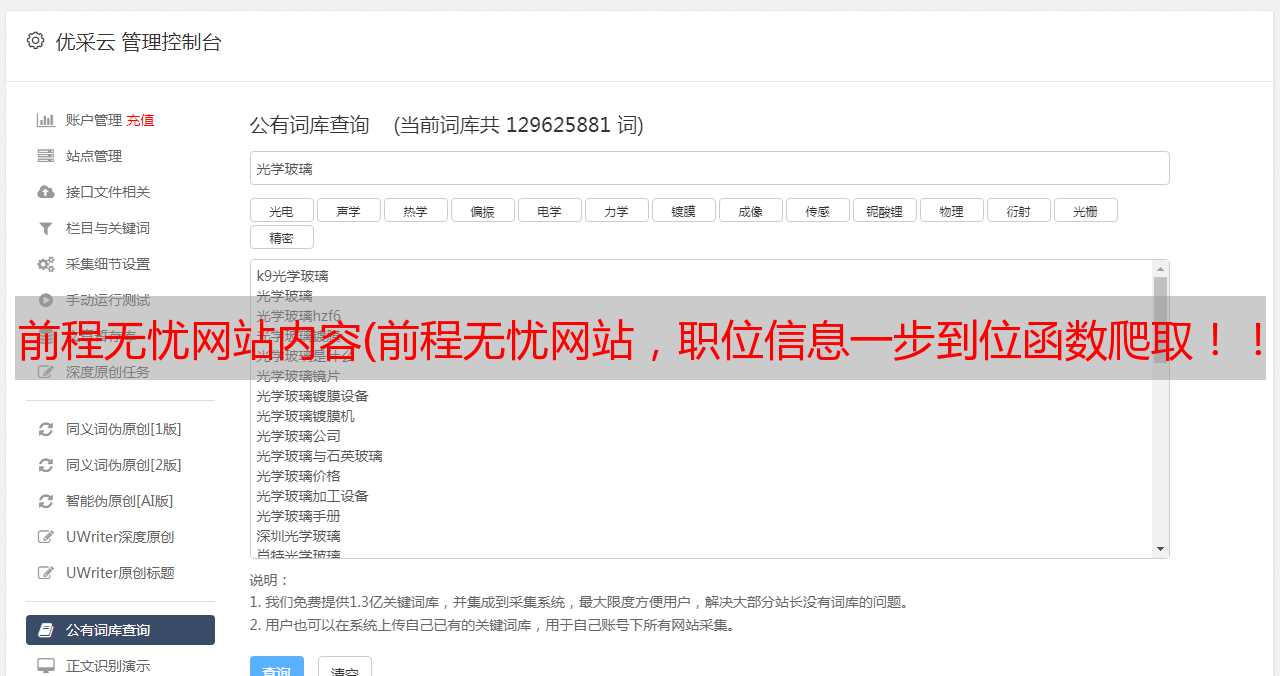

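Start with a search that returns only a small amount of data. First we need the total number of result pages (the part circled above); then we visit each job's detail page to collect the rest of the data, that is, everything inside the boxed area.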
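The scraper reads the total page count from a text node and deletes whitespace and slashes until only the number is left. A slightly more forgiving way to do the same step is to take the first run of digits. This is a sketch under an assumption: the exact text of that node is not shown in the article, so it is assumed to contain only the total plus padding and separators, and `parse_total_pages` is a hypothetical helper name.

```python
import re


def parse_total_pages(pager_text: str) -> int:
    """Return the total page count found in a pager text node.

    Assumption: the node holds only the total, surrounded by padding such as
    non-breaking spaces, newlines or a slash. Taking the first run of digits
    tolerates any mix of those characters.
    """
    match = re.search(r"\d+", pager_text)
    if match is None:
        raise ValueError(f"no page count in {pager_text!r}")
    return int(match.group())


print(parse_total_pages("\xa0/\xa012\r\n"))  # 12
```

Raising `ValueError` instead of letting `int('')` fail makes it obvious when the page layout has changed and the XPath no longer points at the pager.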

2. Enough talk, here's the code:

```python
import re
import time

import pandas as pd
import requests
from lxml import etree


def word(word):
    """Scrape all 51job search results for the keyword `word` and save them to Excel.

    The parameter shares the function's name, as in the original; inside the
    function, `word` is the search keyword string.
    """
    headers = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_3) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/80.0.3987.149 Safari/537.36',
    }

    # Raw Cookie header copied from the browser, split into a dict.
    # Note: the dict is built but never passed to requests.get below.
    raw_cookie = ('adv=adsnew%3D1%26%7C%26adsresume%3D1%26%7C%26adsfrom%3Dhttps%253A%252F%252Fwww.baidu.com%252Fbaidu.php%253Fsc.Ks00000EAMrnlPLIybYNddmhQxV6IThZIe70Sr8VGpPGAfEzIiBE3N8p4LE2LEWCAHyzBdESmMZ-sGbi0sHVd6dhuzz4rfykOQ6N80T4NJgVb2RtQINK4AnOhKIekZ45BUl7eB4IbpXpwye5vkWgttCVOuz2P80--SJceSvd9MaxBTyepp5o5WQo-14LrX_R3tZ_9JO3m-wU6DsC4Er88NrA9MDF.7R_NR2Ar5Od66CHnsGtVdXNdlc2D1n2xx81IZ76Y_u2qS1x3dqyT8P9MqOOgujSOODlxdlPqKMWSxKSgqjlSzOFqtZOmzUlZlS5S8QqxZtVAOtItISiel5SKkOeZwSEHNuxSLsfxzcEqolQOY2LOqWq8WvLxVOgVlqoAzAVPBxZub88zuQCUS1FIEtR_4n-LiMGePOBGyAp7WFEePl6.U1Y10ZDqdIjA80Kspynqn0KsTv-MUWY1njNBujuhnvwBm17BnvcLmyD1mhRYnvubuW01PvDdnsKY5IgfkoBe3fKGUHYznWR0u1dEuZCk0ZNG5yF9pywd0ZKGujYzPsKWpyfqnWfY0AdY5HDsnH-xnH0kPdtznjmzg1Dknjfkg1nsnjuxn1msnfKopHYs0ZFY5HD3P6K-pyfqn104P1KxnHfdnNtznHDkndt1nj6Yn7t1njb4PNt1nj6zndt1njTkPsKBpHYkPH9xnW0Yg1ckPsKVm1Yknj0kg1D3Pjbknjm4rHNxrHczn1T3PWm3P-tYn7tknjFxn0KkTA-b5H00TyPGujYs0ZFMIA7M5H00mycqn7ts0ANzu1Ys0ZKs5H00UMus5H08nj0snj0snj00Ugws5H00uAwETjYs0ZFJ5H00uANv5gKW0AuY5H00TA6qn0KET1Ys0AFL5HDs0A4Y5H00TLCq0A71gv-bm1dsTzdMXh93XfKGuAnqiD4K0ZwdT1Ykn1f3nHbvrjTsPWD1PW6Lnjmvn6Kzug7Y5HDdrjnvPjDYrHD3nHf0Tv-b5yPBPH63ujuBnj0sPjT3Pvf0mLPV5HbvPjPDwWIjnYuarHIjnWb0mynqnfKYIgfqnfKsUWYs0Z7VIjYs0Z7VT1Ys0ZGY5H00UyPxuMFEUHYsg1Kxn7tsg1nsnH7xn0Kbmy4dmhNxTAk9Uh-bT1Ysg1Kxn7tznjRznHKxPjc1PjnvnNts0ZK9I7qhUA7M5H00uAPGujYs0ANYpyfqQHD0mgPsmvnqn0KdTA-8mvnqn0KkUymqn0KhmLNY5H00pgPWUjYs0ZGsUZN15H00mywhUA7M5H60UAuW5H00uAPWujY0mhwGujdKPjIjfH-AP1DdwjwDwRcYn1uDPRPafbFAPj0kPWPDwfKBIjYs0AqY5H00ULFsIjYsc10Wc10Wnansc108nj0snj0sc10Wc100TNqv5H08rH9xna3sn7tsQW0sg108nWIxna3sPdtsQWnY0AN3IjYs0APzm1YzPHDkn6%2526word%253D%2525E6%25258B%25259B%2525E8%252581%252598%2526ck%253D2539.10.112.366.290.220.175.400%2526shh%253Dwww.baidu.com%2526us%253D1.26594.3.0.2.929.0%2526bc%253D110101%26%7C%26adsnum%3D2198971; guid=80fce931b0d504e6510c34969654cfd2; partner=www_baidu_com; '
                  'nsearch=jobarea%3D%26%7C%26ord_field%3D%26%7C%26recentSearch0%3D%26%7C%26recentSearch1%3D%26%7C%26recentSearch2%3D%26%7C%26recentSearch3%3D%26%7C%26recentSearch4%3D%26%7C%26collapse_expansion%3D; search=jobarea%7E%60000000%7C%21ord_field%7E%600%7C%21recentSearch0%7E%60000000%A1%FB%A1%FA000000%A1%FB%A1%FA0000%A1%FB%A1%FA00%A1%FB%A1%FA99%A1%FB%A1%FA%A1%FB%A1%FA99%A1%FB%A1%FA99%A1%FB%A1%FA99%A1%FB%A1%FA99%A1%FB%A1%FA9%A1%FB%A1%FA99%A1%FB%A1%FA%A1%FB%A1%FA0%A1%FB%A1%FA%D0%C2%C3%BD%CC%E5%C4%DA%C8%DD%D4%CB%D3%AA%A1%FB%A1%FA2%A1%FB%A1%FA1%7C%21recentSearch1%7E%60200200%A1%FB%A1%FA000000%A1%FB%A1%FA0000%A1%FB%A1%FA00%A1%FB%A1%FA99%A1%FB%A1%FA%A1%FB%A1%FA99%A1%FB%A1%FA99%A1%FB%A1%FA99%A1%FB%A1%FA99%A1%FB%A1%FA9%A1%FB%A1%FA99%A1%FB%A1%FA%A1%FB%A1%FA0%A1%FB%A1%FA%C3%BD%CC%E5%CA%FD%BE%DD%B7%D6%CE%F6%A1%FB%A1%FA2%A1%FB%A1%FA1%7C%21; m_search=keyword%3D%E6%96%B0%E5%AA%92%E4%BD%93%E5%86%85%E5%AE%B9%E8%BF%90%E8%90%A5%26%7C%26areacode%3D000000')
    cookie = {}
    for item in raw_cookie.split(';'):
        key, value = item.strip().split('=', 1)  # strip the space after each ';'
        cookie[key] = value

    title = []   # title
    num_1 = []   # field 1
    num_2 = []   # field 2
    num_3 = []   # field 3
    num_4 = []   # field 4
    num_5 = []   # field 5
    num_6 = []   # field 6
    num_7 = []   # field 7
    num_al = []  # fields 8, 9, 10 (one tag list per job)

    # Search-results URL: the page number is the field right before ".html".
    # "&degreefrom=99" had been garbled into "°reefrom=99" (the "&deg" entity).
    base = 'https://search.51job.com/list/000000,000000,0000,00,9,99,' + word + ',2,'
    suffix = ('.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99&degreefrom=99'
              '&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1'
              '&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line='
              '&specialarea=00&from=&welfare=')

    # First fetch page 1 to read the total number of pages
    response = requests.get(base + '1' + suffix, headers=headers, timeout=10)
    response.encoding = 'gbk'
    html = etree.HTML(response.text)
    pag_text = html.xpath('//*[@id="resultList"]/div[2]/div[5]/text()')[2]
    pag = re.sub(r'[\s/]', '', pag_text)  # drop whitespace (incl. \xa0) and '/'

    for i in range(1, int(pag) + 1):
        response = requests.get(base + str(i) + suffix, headers=headers, timeout=10)
        response.encoding = 'gbk'
        html = etree.HTML(response.text)

        # Filter the links: broken ones vary, but working detail pages share this pattern
        url_son = []
        for m in html.xpath('//p/span/a[@target="_blank"]/@href'):
            url_son += re.findall(r'https://jobs.51job.com.*?\?s=01&t=0', m)

        for job_url in url_son:
            res_son = requests.get(job_url, headers=headers, timeout=10)
            res_son.encoding = 'gbk'
            html_son = etree.HTML(res_son.text)
            title.append(''.join(html_son.xpath('//div[@class="cn"]/h1/text()')))
            num_1.append(''.join(html_son.xpath('//p[@class="cname"]/a/@title')))
            num_2.append(''.join(html_son.xpath('//div[@class="cn"]/strong/text()')))
            num_3.append(''.join(html_son.xpath('//div[@class="cn"]/p/@title')))
            num_4.append(''.join(html_son.xpath('//span[@class="sp4"]/text()')))
            num_5.append(''.join(html_son.xpath('//div[@class="bmsg job_msg inbox"]/p/text()')))
            num_6.append(''.join(html_son.xpath('//div[@class="bmsg inbox"]/p/text()')))
            num_7.append(''.join(html_son.xpath('//div[@class="tmsg inbox"]/text()')))
            num_al.append(html_son.xpath('//div[@class="com_tag"]/p/@title'))

        print('Page ' + str(i))
        time.sleep(5)  # pause between result pages

    # Data processing: remove non-breaking spaces from field 3 and split the tag
    # list into fields 8-10 (missing tags become None instead of raising KeyError)
    num_3 = [s.replace('\xa0', '') for s in num_3]
    tags = [(t + [None] * 3)[:3] for t in num_al]

    data_finall = pd.DataFrame({
        'title': title, 'field_1': num_1, 'field_2': num_2, 'field_3': num_3,
        'field_4': num_4, 'field_5': num_5, 'field_6': num_6, 'field_7': num_7,
        'field_8': [t[0] for t in tags],
        'field_9': [t[1] for t in tags],
        'field_10': [t[2] for t in tags],
    })

    # Export; change the path for your machine. Writing .xlsx needs an engine such
    # as openpyxl, and to_excel no longer accepts an `encoding` argument (pandas 2.0).
    data_finall.to_excel('C:\\Users\\dell\\Desktop\\' + word + '.xlsx')
    return data_finall
```
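The link filter inside the page loop decides which detail pages get fetched at all. Pulled out into a hypothetical helper `filter_detail_links`, it could look like the sketch below. It reuses the article's pattern (with the dots escaped so they only match literal dots) and, as an extra step that the original does not have, drops duplicate URLs while keeping their order. The sample hrefs are made up for illustration.

```python
import re

# The article's pattern: detail pages on jobs.51job.com whose URL carries "?s=01&t=0"
DETAIL_LINK = re.compile(r"https://jobs\.51job\.com.*?\?s=01&t=0")


def filter_detail_links(hrefs):
    """Return the matching detail-page URLs in order, without duplicates."""
    seen = set()
    links = []
    for href in hrefs:
        for url in DETAIL_LINK.findall(href):
            if url not in seen:
                seen.add(url)
                links.append(url)
    return links


sample = [
    "https://jobs.51job.com/beijing/123456789.html?s=01&t=0",  # hypothetical detail link
    "https://jobs.51job.com/beijing/123456789.html?s=01&t=0",  # same link again
    "https://search.51job.com/list/some-other-page.html",      # no match, dropped
]
print(filter_detail_links(sample))
# ['https://jobs.51job.com/beijing/123456789.html?s=01&t=0']
```

Keeping the filter in its own function makes it easy to test against saved hrefs without sending any requests.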

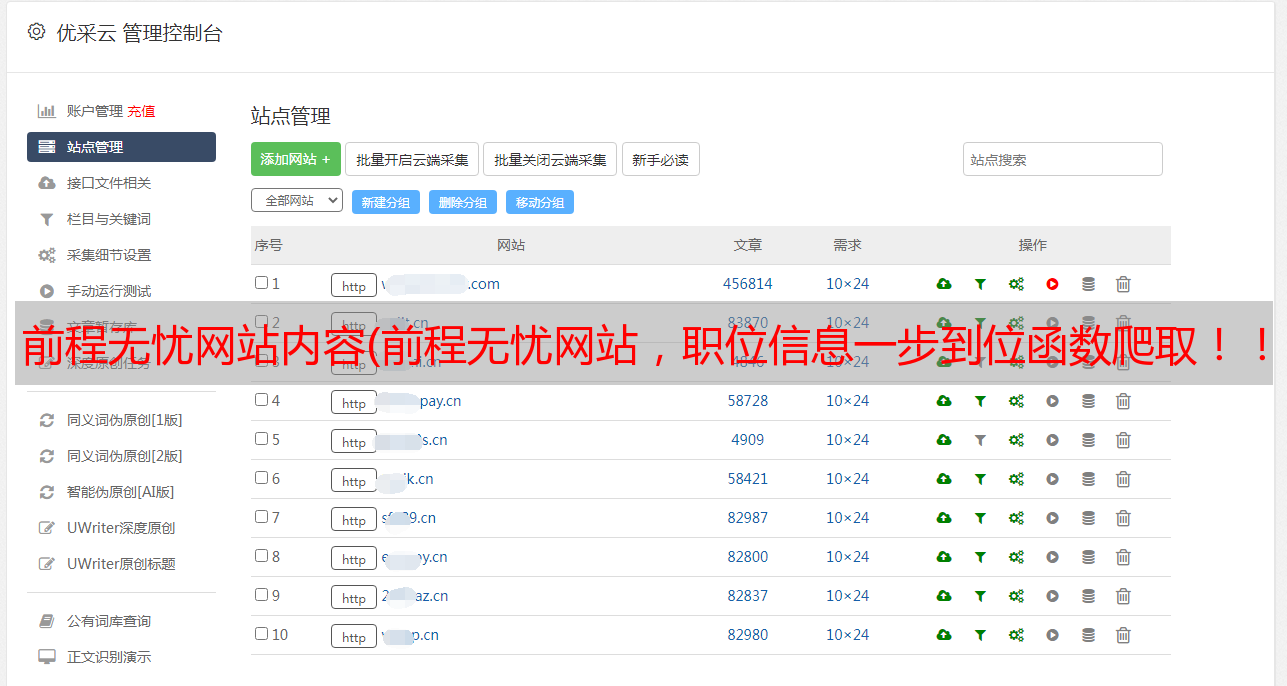

Start crawling:

```python
word('网络安全专员')  # search keyword: "network security specialist"
```
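For each results page, the function drops the keyword into the search URL and changes only the number right before `.html`. That step on its own, as a sketch: `build_list_url` is a hypothetical helper, the query string is the one from the scraper with `&degreefrom` restored, and percent-encoding the keyword with `urllib.parse.quote` is an assumption about what `requests` would do to the raw Chinese keyword anyway.

```python
from urllib.parse import quote

# Query string from the scraper ("&degreefrom=99" restored from the garbled "°reefrom=99")
QUERY = ("lang=c&stype=&postchannel=0000&workyear=99&cotype=99&degreefrom=99"
         "&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1"
         "&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line="
         "&specialarea=00&from=&welfare=")


def build_list_url(keyword: str, page: int) -> str:
    """Build the search-results URL for one page of `keyword`.

    The page number is the last comma-separated field before ".html";
    the keyword is percent-encoded as UTF-8.
    """
    return ("https://search.51job.com/list/000000,000000,0000,00,9,99,"
            f"{quote(keyword)},2,{page}.html?{QUERY}")


print(build_list_url("网络安全专员", 3))
```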

Here is the output. The result is not perfect:

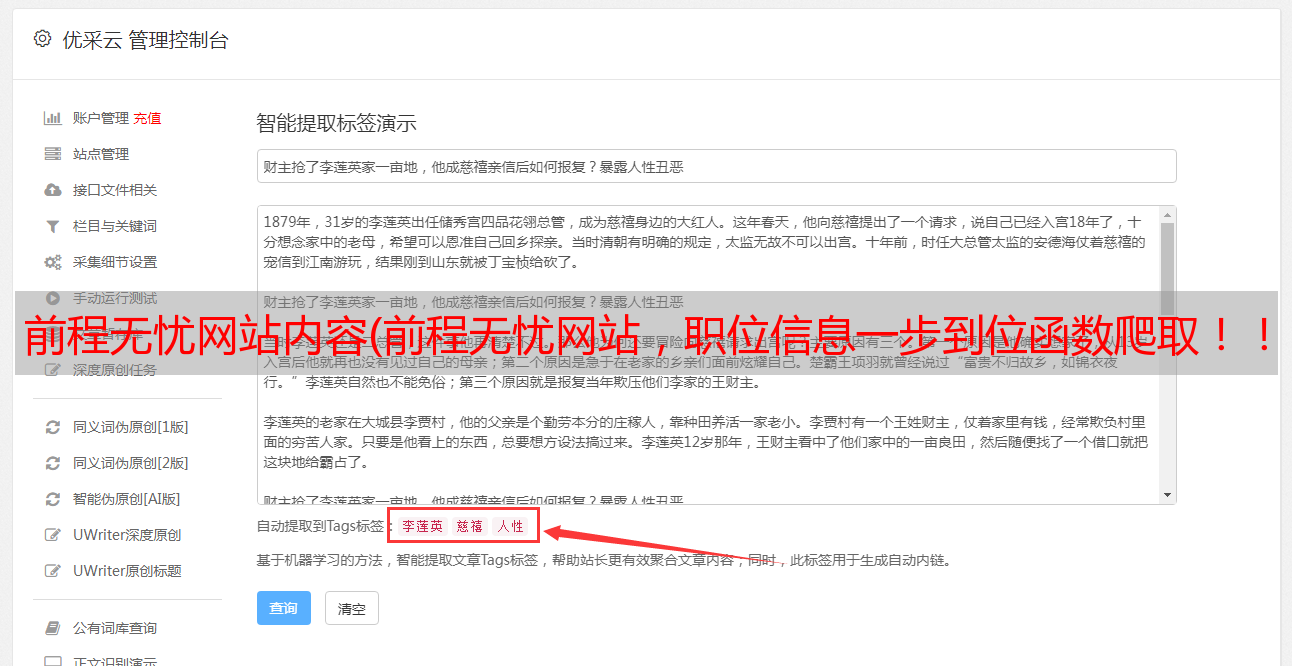
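The article does not say exactly what is wrong with the output. Judging from the processing code itself, two likely sources of messy cells are leftover non-breaking spaces (`\xa0`) and company-tag lists with fewer than three entries. A small sketch of helpers for both, where the names `clean_text` and `split_tags` and the sample tags are hypothetical:

```python
def clean_text(value: str) -> str:
    """Remove non-breaking spaces (U+00A0) and trim surrounding whitespace."""
    return value.replace("\u00a0", "").strip()


def split_tags(tags, n=3):
    """Return exactly n cleaned tag fields, padding with '' when tags are missing."""
    cleaned = [clean_text(t) for t in tags][:n]
    return cleaned + [""] * (n - len(cleaned))


print(split_tags(["Private company", "\u00a050-150 employees"]))
# ['Private company', '50-150 employees', '']
```

Applying `clean_text` to every text field, not just field 3, is a cheap way to make the exported columns more uniform.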

3. Summary

Learn from your many mistakes and keep optimizing the code; that is how you keep getting better.
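One easy improvement concerns the export step. The original passed `encoding='utf_8_sig'` to `to_excel`, but a BOM only matters for text formats such as CSV, and pandas 2.0 removed that argument from `to_excel`. If a CSV file is enough, a minimal standard-library sketch (the helper name `save_csv` is hypothetical) writes the UTF-8 BOM so that Excel detects the encoding and shows Chinese text correctly:

```python
import csv


def save_csv(rows, header, path):
    """Write a header row plus data rows as CSV encoded as "utf-8-sig".

    The leading byte-order mark lets Excel recognize the file as UTF-8.
    """
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)
```

With pandas, the equivalent is `data_finall.to_csv(path, index=False, encoding='utf-8-sig')`.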

Note: the data collected here is for study and research purposes only.