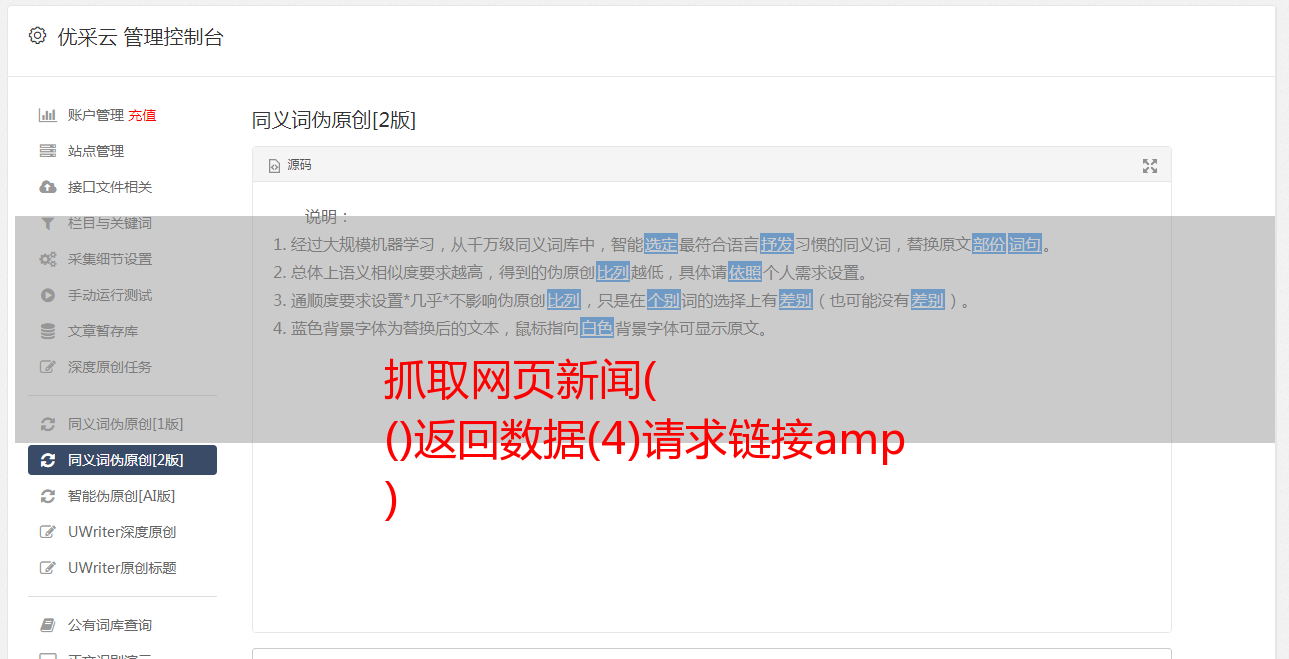

Scraping Web News

优采云 · Published 2021-11-21 16:23

(3) Returned data:

(4) Request URL

机器人&entity_type=post&ts=42&_=39

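Analysis: `ts` and the `_` parameter after it are timestamps and can be dropped. `entity_type=post` is required. The only parameter that changes from request to request is `page`.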
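Below is a minimal sketch of building the list-page URLs from this analysis, using only the standard library. The endpoint is copied verbatim from the spider further down, including its double slash, and may no longer respond. `build_search_url` is a hypothetical helper name.

```python
from urllib.parse import urlencode

# Endpoint copied verbatim from the spider's source; whether it is still live is an assumption.
SEARCH_API = "http://36kr.com/api//search/entity-search"


def build_search_url(page: int, keyword: str, per_page: int = 40) -> str:
    """Build one list-page URL.

    Only `page` varies between requests. The `ts` and `_` timestamp parameters
    are deliberately left out.
    """
    params = {
        "page": page,
        "per_page": per_page,
        "keyword": keyword,
        "entity_type": "post",  # required, per the analysis above
    }
    # urlencode percent-encodes the Chinese keyword as UTF-8.
    return f"{SEARCH_API}?{urlencode(params)}"


if __name__ == "__main__":
    for page in range(1, 4):
        print(build_search_url(page, "机器人"))
```

Letting `urlencode` build the query string keeps the keyword correctly encoded, so the code does not depend on the HTTP client to fix it.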

(4) JSON data of the list page: each item's `id` is the identifier needed to build the detail-page URL.
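Going from one list page to its detail URLs can be sketched as follows. It assumes the response shape the spider reads (`data.items[].id`) and the `http://36kr.com/p/{id}.html` pattern the spider builds. The sample payload is made up and trimmed to the one field that matters. `detail_urls_from_list` is a hypothetical name.

```python
import json
from typing import List

# URL pattern the spider builds from each item id.
DETAIL_URL = "http://36kr.com/p/{}.html"


def detail_urls_from_list(body: str) -> List[str]:
    """Turn one list-page JSON response into detail-page URLs.

    Assumes the shape {"data": {"items": [{"id": ...}, ...]}}. A page with no
    items (for example, past the last result) yields an empty list instead of
    raising an IndexError.
    """
    jdata = json.loads(body)
    items = (jdata.get("data") or {}).get("items") or []
    return [DETAIL_URL.format(item["id"]) for item in items if "id" in item]


# Hypothetical, trimmed-down list response.
sample = '{"code": 0, "data": {"items": [{"id": 5137751}, {"id": 5137752}]}}'
print(detail_urls_from_list(sample))
```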

(5) Detail-page data

Content to extract:

Fields: title, author, date, summary, tags, content
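One way to hold these six fields is a dataclass. Scrapy 2.2 and later also accept dataclass instances as items. This is only a sketch. The spider below reads the keys `published_at`, `user.name`, `extraction_tags` and `content`. The keys `title` and `summary` are assumed names that were not checked against a live page.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass
class Article:
    """One detail page, reduced to the six fields listed above."""
    title: str
    author: str
    date: str
    summary: str
    tags: Any  # left untyped: its exact shape on the page is not shown here
    content: str

    @classmethod
    def from_post(cls, post: Dict[str, Any]) -> "Article":
        """Map a decoded post object onto the fields, defaulting to empty values."""
        return cls(
            title=post.get("title", ""),  # assumed key name
            author=(post.get("user") or {}).get("name", ""),
            date=post.get("published_at", ""),
            summary=post.get("summary", ""),  # assumed key name
            tags=post.get("extraction_tags"),
            content=post.get("content", ""),
        )


# Hypothetical post object, trimmed for illustration.
post = {"title": "Example", "published_at": "2018-07-28 17:13:00",
        "user": {"name": "example-author"}, "content": "<p>...</p>"}
print(Article.from_post(post))
```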

The page source shows that all of this data sits inside a `<script>` tag, in the object assigned to `var props`.
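Below is a sketch of pulling the `var props` payload out of the HTML. It assumes the assignment ends at the closing `</script>` tag. Some terminator is needed: a pattern such as `var props=(.*?)`, with nothing after the lazy group, always matches the empty string. The HTML string is a made-up fragment, and `extract_props` is a hypothetical name.

```python
import re
from typing import Optional

# Stop at the closing </script>; re.S lets the payload span several lines.
# The terminator is an assumption about the page layout.
PROPS_RE = re.compile(r"var props=(.*?)</script>", re.S)


def extract_props(html: str) -> Optional[str]:
    """Return the raw text assigned to `var props`, or None if the page lacks it."""
    match = PROPS_RE.search(html)
    return match.group(1).strip() if match else None


# Hypothetical page fragment for illustration.
page = '<script>var props={"detailArticle|post":{"id":1},"hotPostsOf30":[]}</script>'
print(extract_props(page))
```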

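(6) Extract the data with a regular expression and convert it into plain JSON. The reason is that once the data is JSON, any individual field is easy to read. Cutting fields out with regular expressions alone makes some of them hard to get or leaves them incomplete.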
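The original code cuts the post object out by matching up to the next key, `"hotPostsOf30"`, and then trims the trailing comma. The sketch below swaps in a more tolerant alternative, which is not the author's method. It finds the `"detailArticle|post":` key and lets `json.JSONDecoder.raw_decode` parse exactly one JSON value from that point, so the code no longer depends on which key comes next. `parse_post` and the sample string are hypothetical.

```python
import json
from typing import Any, Dict, Optional

POST_KEY = '"detailArticle|post":'


def parse_post(props_text: str) -> Optional[Dict[str, Any]]:
    """Decode the JSON object stored under "detailArticle|post", or return None."""
    start = props_text.find(POST_KEY)
    if start == -1:
        return None
    # raw_decode does not skip leading whitespace, so strip it first.
    rest = props_text[start + len(POST_KEY):].lstrip()
    try:
        # Parses one complete JSON value and ignores whatever follows it.
        obj, _end = json.JSONDecoder().raw_decode(rest)
    except json.JSONDecodeError:
        return None
    return obj if isinstance(obj, dict) else None


# Hypothetical props text, trimmed for illustration.
props = ('{"detailArticle|post": {"published_at": "2018-07-28", '
         '"user": {"name": "example-author"}, "content": "<p>hi</p>"}, '
         '"hotPostsOf30": []}')
post = parse_post(props)
print(post["user"]["name"], post["published_at"])
```

Because the decoder tracks braces and string quoting itself, a comma or brace inside the article text cannot end the match early.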

Source code:

```python
# -*- coding: utf-8 -*-
# @Time   : 2018/7/28 17:13
# @Author : 蛇崽
# @Email  : 643435675@QQ.com
# @File   : 36kespider.py

import json
import re

import scrapy


class ke36Spider(scrapy.Spider):
    name = 'ke36'
    # Requests go to 36kr.com (no "www"), so allow the bare domain.
    allowed_domains = ['36kr.com']
    start_urls = ['https://36kr.com/']

    def parse(self, response):
        # The home page is only an entry point; queue the search API list pages.
        word = '机器人'  # search keyword ("robot")
        for page in range(1, 200):
            # ts and _ are omitted; only page varies, entity_type=post is required.
            burl = ('http://36kr.com/api//search/entity-search'
                    '?page={}&per_page=40&keyword={}&entity_type=post').format(page, word)
            yield scrapy.Request(burl, callback=self.parse_list, dont_filter=True)

    def parse_list(self, response):
        jdata = json.loads(response.text)
        # Pages past the last result return no items, so never index items[0].
        items = jdata.get('data', {}).get('items') or []
        for item in items:
            # Each item's id is the identifier used in the detail-page URL.
            b_url = 'http://36kr.com/p/{}.html'.format(item['id'])
            yield scrapy.Request(b_url, callback=self.parse_detail, dont_filter=True)

    def parse_detail(self, response):
        res = response.text
        # The article data is assigned to `var props` inside a <script> tag.
        # A bare lazy (.*?) would match an empty string, so stop at </script>.
        content = re.findall(r'var props=(.*?)</script>', res, re.S)
        if not content:
            self.logger.warning('no props found on %s', response.url)
            return
        # Cut out the post object between "detailArticle|post": and "hotPostsOf30".
        minfo = re.findall(r'"detailArticle\|post":(.*?)"hotPostsOf30', content[0], re.S)
        if not minfo:
            self.logger.warning('no post object found on %s', response.url)
            return
        # Drop the comma that separated the post object from the next key.
        jdata = json.loads(minfo[0].rstrip().rstrip(','))
        yield {
            'published_at': jdata['published_at'],
            'username': jdata['user']['name'],
            # Kept from the original: this reads user.title, a field of the author object.
            'title': jdata['user']['title'],
            'extraction_tags': jdata['extraction_tags'],
            'content': jdata['content'],
        }
```